As of this writing, Assisted Installer is the easiest way to install an OpenShift cluster on a custom/bespoke infrastructure. You do not need to manually deal with ignition files or manually configure the OpenShift installer. You do not even need to manually set up a bootstrap node.

In this post, I will walk you through how to create an OpenShift cluster using Assisted Installer on a bespoke infrastructure. To host VMs, I am using Proxmox VE, a lightweight open-source virtualization product. However, similar steps should also apply to other hypervisor products. If you want to know how to install Proxmox, see my previous post at https://blog.rossbrigoli.com/2020/10/running-openshift-at-home-part-34.html

First, we need to set up the infrastructure. We need to create VMs and then do the following steps to configure our infrastructure.

- Create and configure a DNS server

- Configure the DHCP server of our router

- Create and configure an HAProxy load balancer

We will run the supporting services in a dedicated VM called openshift-services.

The first step is to create a VM that will host all of the services required by the cluster.

Creating the Virtual Machines

1. Download the CentOS Stream image from https://www.centos.org/centos-stream/ and upload it to Proxmox. You may also let Proxmox download the image directly from a URL.

Download the correct architecture that matches your VM host. In my case, my VMs have x86_64 CPUs.

Upload the image to Proxmox's local storage as shown below.

2. Create a VM in Proxmox and name it "openshift-services".

3. Use the CentOS Stream ISO file as the DVD image to boot from.

4. Choose the disk size. 100GB should be enough, but you can make it bigger. This VM does not need many resources because it only handles DNS name resolution and load balancing, so 2 CPUs (or fewer) and 4GB of RAM are enough.

5. Review the configuration and create the VM.

6. Start the VM and follow the CentOS Stream installer instructions. Then wait for the installation to complete.

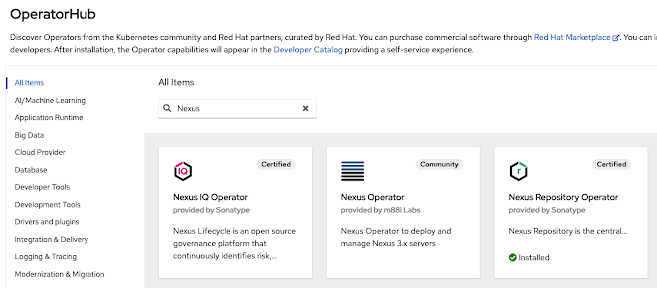

7. While waiting for the installation to finish, create the cluster from the Red Hat Hybrid Cloud Console. Navigate to https://console.redhat.com > OpenShift, then click "Create Cluster".

If you don't have a Red Hat account yet, you can register for one. It's free.

8. Because we want to install OpenShift on our bespoke infrastructure, we need to choose the "Run it yourself" option and then the "Platform Agnostic" option.

9. You will be presented with 3 installation options. Let's use the recommended option, Interactive, which is the easiest one.

10. Fill in the details according to the domain and cluster name you choose.

Take note that the cluster name will become part of the base domain of the resulting cluster. Also take note of the domain name, because you will need to configure the DNS server to resolve this domain name to the load balancer.

For example:

Cluster name = mycluster

Cluster base domain = mydomain.com

The resulting base domain of the cluster will be mycluster.mydomain.com

My plan is to let the VMs get their IP addresses from my router through DHCP, so I am selecting the DHCP option for the hosts' network configuration as shown below.

11. On the next page, there are optional operators that you can install. I don't need them for this installation so I will not select any.

12. The third page is where you define the hosts/nodes. Click the Add Host button. You may also paste your SSH public key here so that you can SSH to the nodes for debugging later. Once everything is set, click Generate Discovery ISO. This will generate an ISO image.

13. Wait for the ISO to be generated, then download it. This will be the image that you will use to boot the VMs.

14. While waiting for the ISO to be downloaded, revisit the CentOS Stream installation on the openshift-services VM. By this time, the installation should have been completed and you just need to reboot the system.

15. Once the OpenShift Discovery ISO is downloaded, upload it to Proxmox local disk so that we can use it as the bootable installer for our VMs.

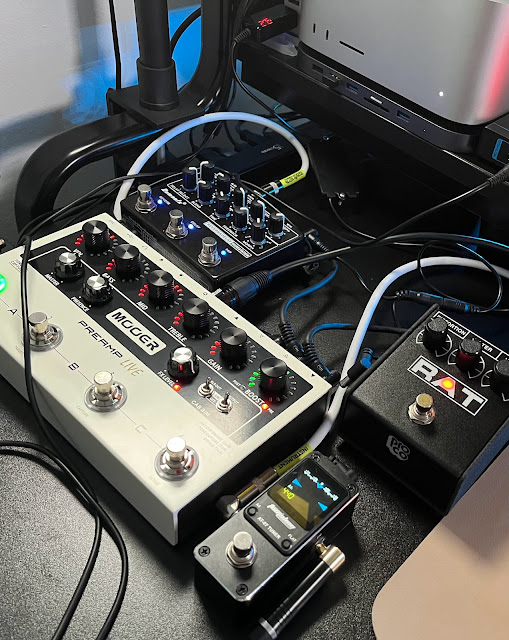

16. Once uploaded, let's start creating the VMs for our OpenShift cluster. We will be creating a total of 5 VMs, 3 for master nodes and 2 for worker nodes. You can choose to create more worker nodes as you wish.

Prepare the VMs according to the spec below.

Both Master and Worker Nodes:

CPU: 8

Memory: 16 GB

Disk: 250 GB

Use the ISO image you downloaded as the image for the DVD drive. In the above screenshot, it is the value of the ide2 field.
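If you prefer the Proxmox command line over the web UI, the five VMs could be created with `qm create`. The following is only a dry-run sketch that prints the commands instead of running them. The VM IDs (201 to 205), the control-plane VM names, the storage names `local-lvm` and `local`, the bridge `vmbr0`, and the ISO file name `discovery.iso` are all assumptions, so adjust them to your own setup.

```shell
# Dry-run sketch: prints one `qm create` command per cluster VM.
# Assumptions (not from this post): VM IDs start at 201, disks live on
# `local-lvm`, ISOs live on `local`, the bridge is `vmbr0`, and the
# discovery ISO was uploaded as `discovery.iso`.
print_vm_commands() {
  iso="local:iso/discovery.iso"
  vmid=201
  for name in okd4-control-plane-1 okd4-control-plane-2 okd4-control-plane-3 \
              okd4-compute-1 okd4-compute-2; do
    # 8 cores, 16 GB RAM (16384 MiB), 250 GB disk, discovery ISO on ide2
    echo "qm create ${vmid} --name ${name} --cores 8 --memory 16384" \
         "--net0 virtio,bridge=vmbr0 --scsihw virtio-scsi-pci" \
         "--scsi0 local-lvm:250 --ide2 ${iso},media=cdrom"
    vmid=$((vmid + 1))
  done
}

print_vm_commands
```

Review the printed commands before running them on the Proxmox host, and check each VM's boot order in the Proxmox UI so that it boots from the DVD drive.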

17. After creating the VMs, do not start them yet as we still need to install the supporting services in the openshift-services VM. Your list of VMs should look something like the screenshot below.

18. Using your router's built-in DHCP server, assign IP addresses to your VMs through the address reservation feature. This requires mapping MAC Addresses to IP Addresses. Do this before starting the VMs. Here is an example mapping I used in my router setup. I used x.x.x.210 as the IP address of the openshift-services. It is important to take note of this address because this will be the load balancer IP and DNS server IP.
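To fill in the address reservations you need each VM's MAC address. You can read it from the Proxmox UI, or, as a rough sketch, pull it out of `qm config` on the Proxmox host. The helper name `mac_from_net0` and the VM IDs are hypothetical, and the sketch assumes each VM has a single `net0` network device.

```shell
# Extract the MAC address from a Proxmox `net0:` config line read on stdin.
# Such a line looks like (example values):
#   net0: virtio=BC:24:11:AA:BB:CC,bridge=vmbr0
mac_from_net0() {
  sed -n 's/^net0: [^=]*=\([0-9A-Fa-f:]\{17\}\).*/\1/p'
}

# Usage on the Proxmox host (VM IDs 201-205 are an assumption):
#   for id in 201 202 203 204 205; do
#     printf '%s ' "$id"; qm config "$id" | mac_from_net0
#   done
```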

DNS Server

19. Now let's install and configure a DNS Server in openshift-services VM. You need to SSH to the openshift-services VM.

An OpenShift cluster requires a DNS server. We will use the openshift-services VM as the DNS server by installing Bind (a.k.a. named) on it.

Bind can be installed by running the following command inside the openshift-services VM.

dnf install -y bind bind-utils

After installing, run the following commands to enable and start the named service and check its status.

systemctl enable named

systemctl start named

systemctl status named

Once we have validated the installation, we need to configure the named service according to the IP addresses and DNS name mapping that we want to use for our nodes.

20. Configure the DNS server so that the names will resolve to the IP addresses that we configured in the router's DHCP address reservation (step 18). I have prepared a named configuration for this setup which is available on GitHub at https://github.com/rossbrigoli/okd4_files. So let's get these files and configure our DNS server. Note that you can choose to use your own DNS server configuration.

git clone https://github.com/rossbrigoli/okd4_files.git

21. I have prepared a script that will edit the files according to the chosen cluster name and base domain name. These should be the same names you entered when creating the cluster in the Red Hat Hybrid Cloud Console. In the same directory, run the setdomain.sh script.

cd okd4_files

./setdomain.sh mycluster mydomain.com

This script will edit the named configuration files in place.

22. Copy the named config files to the correct location, restart the named service, and open the DNS port in the firewall. Ensure that the named service is running after the restart.

sudo cp named.conf /etc/named.conf

sudo cp named.conf.local /etc/named/

sudo mkdir /etc/named/zones

sudo cp db* /etc/named/zones

sudo systemctl restart named

sudo firewall-cmd --permanent --add-port=53/udp

sudo firewall-cmd --reload

sudo systemctl status named

23. Now that we have a DNS name server running, we need to configure the router to use it as the primary DNS server so that all the DHCP clients (all VMs) will get configured with this DNS server as well. Depending on your router, the process of changing the primary DNS server may be a little different. Here's how it looks in mine.

24. Reboot the openshift-services VM. At the next boot, it should get the new primary DNS from the DHCP server. Run the following command to check if you can resolve DNS names.

nslookup okd4-compute-1.mycluster.mydomain.com

If you followed my DHCP address reservation configuration and DNS configuration, you should get a response with the IP address 192.168.1.204.
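Checking one name at a time gets tedious, so here is a small sketch that compares a DNS answer against the address you expect. It assumes `dig` from the bind-utils package installed earlier. The function names `resolve` and `check_record` are hypothetical, and the expected addresses must match your own reservations.

```shell
# Look up the first A record for a name.
# Assumes `dig` (from bind-utils) is available on this machine.
resolve() {
  dig +short "$1" | head -n 1
}

# Compare the resolved address of a name with the expected address.
# Prints OK or FAIL and returns non-zero on a mismatch.
check_record() {
  name="$1"
  expected="$2"
  actual="$(resolve "$name")"
  if [ "$actual" = "$expected" ]; then
    echo "OK   $name -> $actual"
  else
    echo "FAIL $name -> ${actual:-no answer} (expected $expected)"
    return 1
  fi
}

# Example calls, using the names and addresses from this post:
#   check_record okd4-compute-1.mycluster.mydomain.com 192.168.1.204
#   check_record api.mycluster.mydomain.com 192.168.1.210
```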

Load Balancer

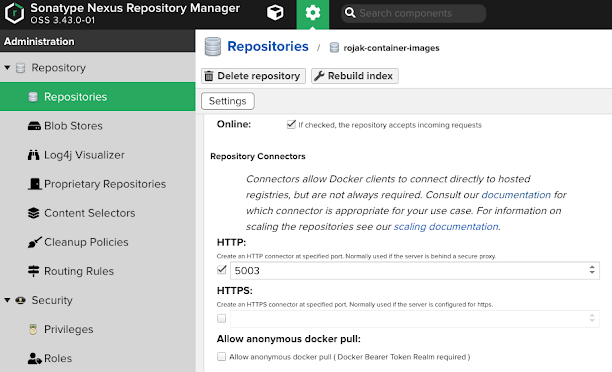

Next, we need to route incoming traffic to the OpenShift nodes using an HAProxy load balancer. OpenShift also uses HAProxy internally.

25. On the openshift-services VM, install HAProxy by running the following command.

sudo dnf install -y haproxy

26. Copy the HAProxy config file from the okd4_files directory we got from git earlier and then restart the service. Normally you would use separate load balancers for control-plane traffic and workload traffic. However, for simplicity, in this example we are using the same load balancer for both kinds of traffic.

cd

sudo cp okd4_files/haproxy.cfg /etc/haproxy/haproxy.cfg

sudo setsebool -P haproxy_connect_any 1

sudo systemctl enable haproxy

sudo systemctl start haproxy

sudo systemctl status haproxy

27. Open up the firewall ports so that HAProxy can accept connections.

sudo firewall-cmd --permanent --add-port=6443/tcp

sudo firewall-cmd --permanent --add-port=22623/tcp

sudo firewall-cmd --permanent --add-service=http

sudo firewall-cmd --permanent --add-service=https

sudo firewall-cmd --reload
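Before starting the cluster VMs, it can help to confirm from another machine that the load balancer ports are reachable. The following is a minimal sketch that relies on bash's `/dev/tcp` pseudo-device and the coreutils `timeout` command. The function names are hypothetical, and it assumes the HAProxy configuration listens on the same four ports opened above.

```shell
# Return 0 if a TCP connection to host ($1) and port ($2) succeeds
# within 3 seconds. Needs bash (for /dev/tcp) and coreutils `timeout`.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Report the state of the API (6443), machine config (22623),
# HTTP (80) and HTTPS (443) ports on the given host.
check_lb_ports() {
  host="$1"
  for port in 6443 22623 80 443; do
    if port_open "$host" "$port"; then
      echo "open   $host:$port"
    else
      echo "closed $host:$port"
    fi
  done
}

# Example, using the openshift-services address from this post:
#   check_lb_ports 192.168.1.210
```

A port reported as closed usually points to either the firewall rule or the HAProxy service, so check `systemctl status haproxy` first.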

28. Now that we have a load balancer pointing to the correct IP Addresses, start all the VMs.

29. After starting the VMs, go back to Red Hat Hybrid Cloud Console https://console.redhat.com. In the cluster that you created earlier, the VM/hosts should start coming up in the list as shown below.

30. You can then start assigning roles to these nodes. I assigned 3 of the nodes as control-plane nodes (masters) and 2 of the nodes as compute (worker) nodes. You can do this by clicking the values under the role column.

31. Click the Next buttons until you reach the Networking options page. Because we manually configured our load balancer and DNS server, we can select the User-Managed Networking option as shown below.

32. On the Review and Create page, click Install Cluster. This will start the installation of OpenShift to your VMs. You will be taken to the installation progress page, and from here, it's a waiting game.

This process will take around 40 minutes to an hour depending on the performance of your servers. During the course of installation, the VMs will reboot several times.

There may be errors along the way but they will self-heal. If you find a pod that is stuck, you can also delete it to help the installation heal faster.

Post Installation

Once the cluster installation is complete, ensure that your computer can resolve the base domain you used to the IP address of openshift-services so that you can access the cluster's web console and the Kubernetes APIs through the CLI.

You need to configure the following host mapping.

mycluster.mydomain.com should resolve to 192.168.1.210

*.mycluster.mydomain.com should resolve to 192.168.1.210

api.mycluster.mydomain.com should resolve to 192.168.1.210

You can add this to your /etc/hosts file or change the primary DNS server of your client computer to the IP address of openshift-services, 192.168.1.210, where the DNS server is running. On a Windows machine, the hosts file is C:\Windows\System32\drivers\etc\hosts.
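One caveat if you go the hosts-file route: hosts files cannot express wildcard entries, so the wildcard mapping has to be replaced by explicit hostnames. As a sketch, the helper below prints entries for the API endpoint plus the default web console and OAuth route hostnames, which is what the console login needs. The function name `hosts_entries` is hypothetical, and any other application routes you create would need their own lines.

```shell
# Print hosts-file lines for the names a client needs to reach the cluster.
# Arguments: load balancer IP, cluster name, base domain.
hosts_entries() {
  ip="$1"
  cluster="$2"
  base="$3"
  # API endpoint, then the default console and OAuth route hostnames
  for host in api console-openshift-console.apps oauth-openshift.apps; do
    echo "$ip $host.$cluster.$base"
  done
}

hosts_entries 192.168.1.210 mycluster mydomain.com
```

On macOS or Linux you could append the output with `hosts_entries 192.168.1.210 mycluster mydomain.com | sudo tee -a /etc/hosts`.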

Alternatively, you can visit https://console.redhat.com, navigate to OpenShift menu, and select the cluster you just created. Then click the Open console button.

OpenShift CLI

At this point, you won't be able to log in to the web console because you do not have the kubeadmin user's password and have not created any other user yet. However, you can access the cluster from the CLI by using the kubeconfig file, which we will download in step 35.

To access the cluster, we will need to install the OpenShift Client called oc.

34. After downloading, extract the tar file and copy kubectl and oc to a directory on your PATH. I am using macOS, so the following commands work for me after downloading the tar file.

mkdir -p ~/Downloads/openshift-client-mac

tar -xvzf ~/Downloads/openshift-client-mac.tar.gz -C ~/Downloads/openshift-client-mac

cp ~/Downloads/openshift-client-mac/oc /usr/local/bin

cp ~/Downloads/openshift-client-mac/kubectl /usr/local/bin

On macOS, you might be prompted to allow this app to access the system directories. You need to grant it permission for it to work properly.

35. In the Red Hat Hybrid Cloud Console, download the kubeconfig file. It holds a certificate used to authenticate to your OpenShift cluster's API. Navigate to Clusters > your cluster name > Download kubeconfig.

This will download a kubeconfig file. To access your cluster, set the KUBECONFIG environment variable to the path of this kubeconfig file. The example commands below work on macOS.

cp ~/Downloads/kubeconfig-noingress ~/

export KUBECONFIG=~/kubeconfig-noingress

36. To confirm that you can access the cluster, run the following oc command.

oc get nodes

This will list all the nodes of your newly created OpenShift cluster.

Note: You can start accessing the cluster even before the installation is 100% complete. This is useful for following the progress of the installation. For example, you can run the following command to check on the status of the Cluster Operators.

oc get clusteroperators

If the installation goes well, all operators should report Available=True.

Note: If you see errors in the status of one or more Cluster Operators, you can try deleting the pods of those operators. This will trigger self-healing and help to unstick the installation process.
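With many operators in the list, it is easy to miss the one that is lagging. As a sketch, the filter below reads the table printed by `oc get clusteroperators` and prints only the operators whose AVAILABLE column is not True. The function name `not_available` is hypothetical. It assumes the default column order (NAME, VERSION, AVAILABLE, ...) and that the VERSION column is populated, which may not hold very early in an installation.

```shell
# Read `oc get clusteroperators` output on stdin and print the name of
# every operator whose AVAILABLE column (third field) is not "True".
# Assumes the VERSION column is populated; a blank VERSION shifts the
# fields and makes this filter unreliable.
not_available() {
  awk 'NR > 1 && $3 != "True" { print $1 }'
}

# Usage:
#   oc get clusteroperators | not_available
```

An empty result means every operator reports Available=True.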

37. At the end of the installation, you will be prompted with the kubeadmin password. Copy this password and use it to access the OpenShift Console.

Using the username kubeadmin and the password you copied from the installation page of the Red Hat Hybrid Cloud Console, you should be able to log in to your OpenShift cluster.

Voila! Congratulations! You have just created a brand new OpenShift cluster.

There are also post-installation steps that need to be done, such as configuring the internal image registry and adding an identity provider to get rid of the temporary kubeadmin login credentials. I will not cover these in this post because they are very well documented in the OpenShift documentation.